inBook

2022

Repository Open Access

Information and complexity, or: Where is the information?

In: Complexity and emergence : Lake Como School of Advanced Studies, Italy, July 22-27, 2018 / Sergio Albeverio... (eds.) Cham : Springer, 2022. - pp. 87-105

(Springer proceedings in mathematics and statistics ; 383)

inJournal

2021

Journal Open Access

Confounding ghost channels and causality : a new approach to causal information flows

In: Vietnam journal of mathematics, 49 (2021) 2, pp. 547-576

inBook

2020

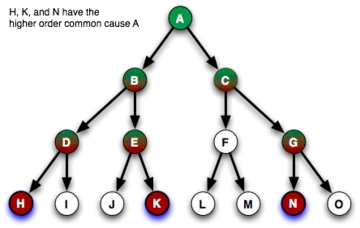

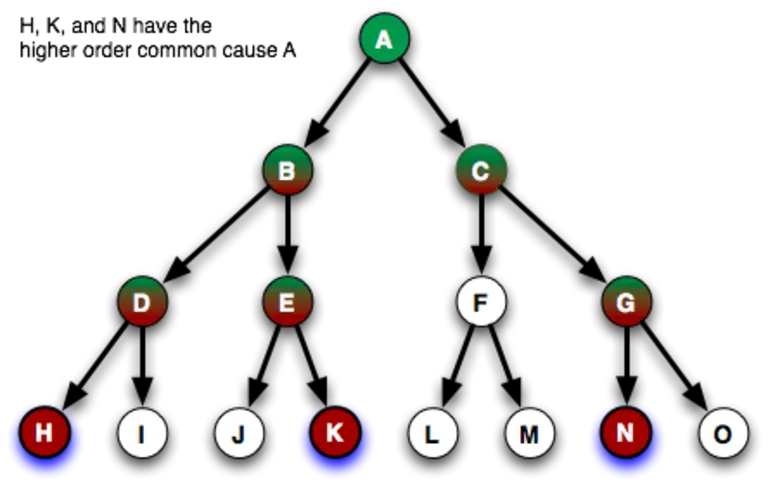

A numerical efficiency analysis of a common ancestor condition

In: Mathematical aspects of computer and information sciences : 8th international conference, MACIS 2019, Gebze-Istanbul, Turkey, November 13-15, 2019 ; revised selected papers / Daniel Slamanig... (eds.) Cham : Springer, 2020. - pp. 357-363

(Lecture notes in computer science ; 11989)

inJournal

2020

Journal Open Access

Information decomposition based on cooperative game theory

In: Kybernetika, 56 (2020) 5, pp. 979-1014

inBook

2018

Repository Open Access

Computing the unique information

In: IEEE international symposium on information theory (ISIT) from June 17 to 22, 2018 at the Talisa Hotel in Vail, Colorado, USA. Piscataway, NJ : IEEE, 2018. - pp. 141-145

inJournal

2016

Journal Open Access

Information flow in learning a coin-tossing game

In: Nonlinear theory and its applications, 7 (2016) 2, pp. 118-125

inJournal

2015

Journal Open Access

Information-theoretic inference of common ancestors

In: Entropy, 17 (2015) 4, pp. 2304-2327

inJournal

2014

Journal Open Access

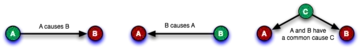

Discriminating between causal structures in Bayesian networks given partial observations

In: Kybernetika, 50 (2014) 2, pp. 284-295

inJournal

2013

'More is different' in functional magnetic resonance imaging : a review of recent data analysis techniques

In: Brain Connectivity, 3 (2013) 3, pp. 223-239

inBook

2012

A new common cause principle for Bayesian networks

In: Proceedings of the 9th workshop on uncertainty processing WUPES '12 : Marianske Lazne, Czech Republic ; 12-15th September 2012. Praha : Academy of Sciences of the Czech Republic / Institute of Information Theory and Automation, 2012. - pp. 149-162

inJournal

2012

Information-geometric approach to inferring causal directions

In: Artificial intelligence, 182/183 (2012), pp. 1-31

inJournal

2012

Journal Open Access

On solution sets of information inequalities

In: Kybernetika, 48 (2012) 5, pp. 845-864

inJournal

2010

Repository Open Access

Justifying additive noise model-based causal discovery via algorithmic information theory

In: Open systems and information dynamics, 17 (2010) 2, pp. 189-212

inJournal

2009

A refinement of the common cause principle

In: Discrete applied mathematics, 157 (2009) 10, pp. 2439-2457

inJournal

2008

Repository Open Access