Episode 11 — Our computational hardware over the years

Published Dec 14, 2021

Mathematicians are usually pictured contemplating in front of a blackboard, with nothing but a piece of chalk and the sharpness of their minds to solve the most perplexing problems. In reality, though, at least some mathematical researchers need a few more tools at their disposal. At our Max Planck institute, these are not exorbitantly expensive experimental setups like particle colliders, giant telescopes, or intricate microscopes; they come in the form of computing hardware.

The history of our “silicon-based abaci” mirrors the general move from individually powerful computers to more parallelized computing, which is realized in modern graphics processors with thousands of cores. While current smartphones outshine most of the compute servers of yesteryear, those servers were indispensable for the complex computational tasks of their time. Perhaps a look into the past can make you appreciate the modern technological marvel you rely on every day even more.

Compute Servers at MPI MiS

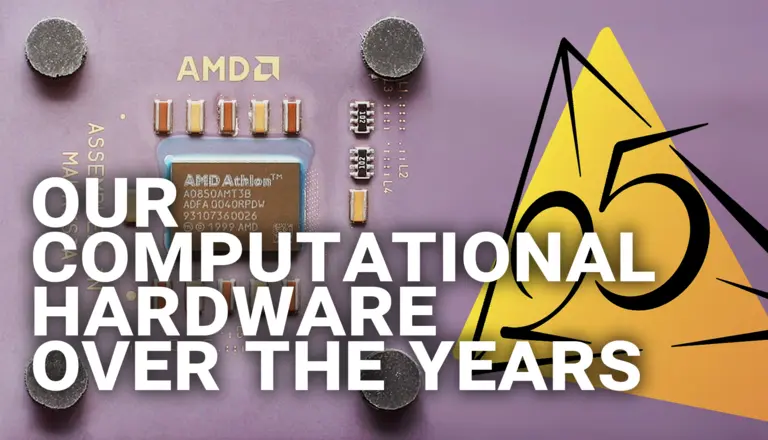

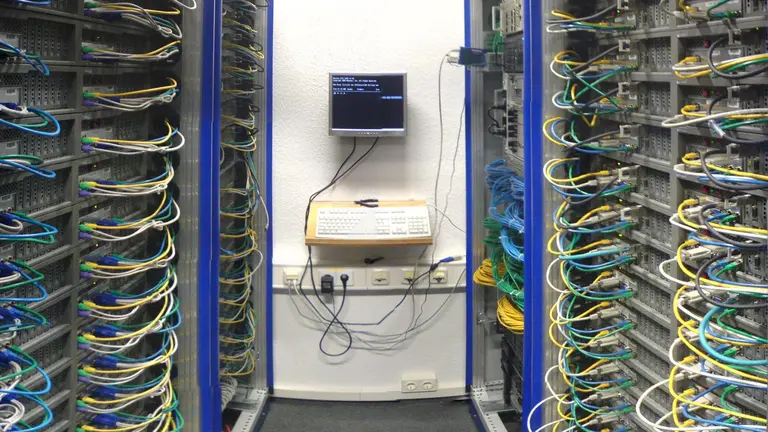

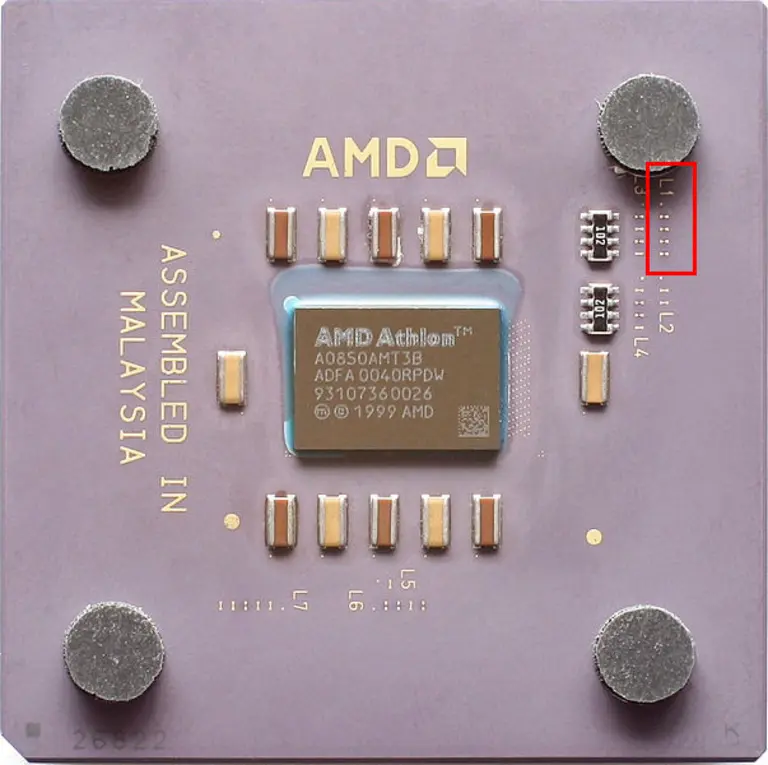

In the working group of Prof. Hackbusch, a self-built system consisting of 16 ordinary PCs was assembled. Such computer systems became popular in the 1990s and were called Beowulf clusters (read more about them on Wikipedia). The PCs were connected by a (gigabit) network, which made distributed computing possible as well. Each individual PC had an AMD Athlon CPU with 900 MHz and 4 GB of RAM.

By the way: back then you could speed up the CPU with a pencil! On the processor, the clock frequency was fixed by cutting small metal bridges (L1; top right). If you restored these connections, which was possible with the graphite of a pencil, you could set the clock speed yourself.

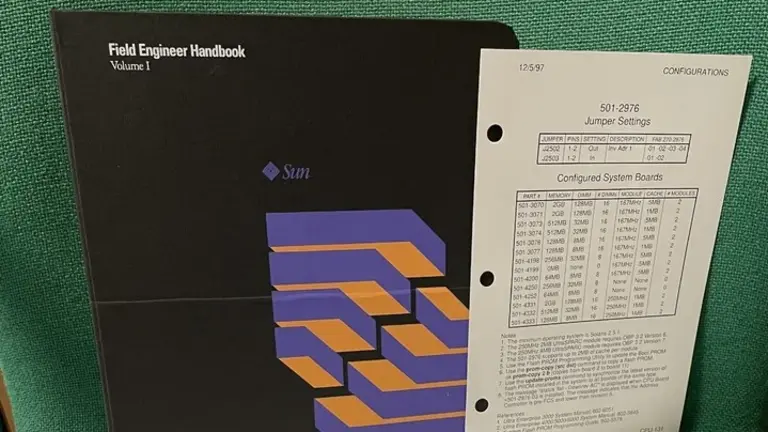

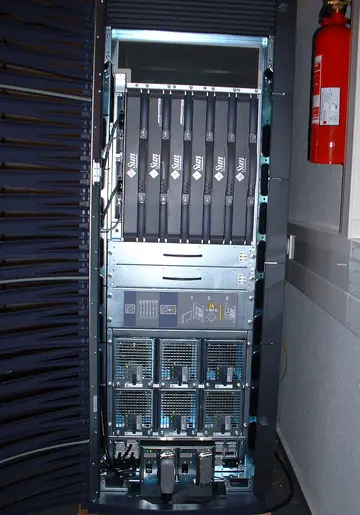

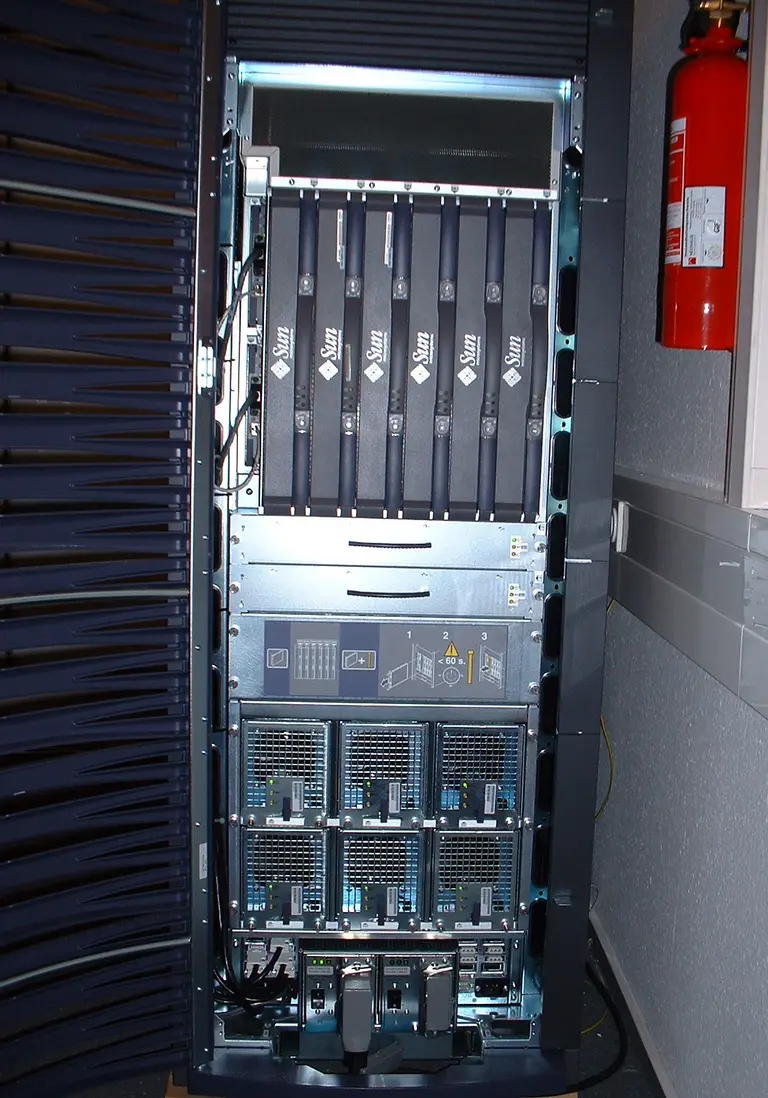

The next large system to move in was a SunFire 6800 made by Sun. It had 24 UltraSparc III CPUs with a clock frequency of 900 MHz and 96 GB of RAM. A major advantage of this system for parallel processing was that access to memory was virtually equally fast for all the CPUs, a feature which is difficult to realize.

Together with the Max Planck Institute for Evolutionary Anthropology (MPI EVA), we purchased a large cluster system of 106 servers equipped with AMD Opteron CPUs. Of these, 34 servers were connected to a high-speed network (Infiniband), and each of them had 16 GB of RAM at its disposal. The remaining 72 nodes each had 4 GB of memory and were connected via a normal gigabit network.

By the way: the MPI EVA used this system for the first steps in determining the Neanderthal genome.

In high-performance computing, the trend was increasingly towards multiple smaller servers instead of a single very large one. We followed this path from then on as well and purchased 5 Sun x4600 servers, each with 8 dual-core AMD Opteron CPUs. The clock frequency was 2.4 GHz. Each computer also had 256 GB of RAM.

In the meantime, the number of processing cores per processor had increased. The new system already used hexa-core CPUs, i.e. CPUs with 6 cores each. It consisted of 8 servers of the type IBM dx360 M4, and each server had two processors, which meant it could run 12 programs simultaneously. Each computer also had 128 GB of RAM. The computers were again connected via a high-speed network (Infiniband). In addition, we employed 3 accelerator cards of the Intel Xeon Phi type.

The server landscape is now very heterogeneous. For example, we have a cluster of 24 servers, each of which has 24 CPU cores and 128 GB of RAM. In addition, there are 4 servers, each with 64 CPU cores and 3 TB of RAM, and another two systems with 128 CPU cores each.

Accelerator cards are also playing an increasingly important role. We currently use 6 Nvidia cards. Adding up all 54 of our compute servers gives a current total of 1818 CPU cores and 35072 GB of memory. Even so, this places us among the smaller installations within the Max Planck Society.
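As a quick cross-check of the figures quoted above, here is a minimal Python sketch that tallies the server groups named explicitly in the text and compares them with the overall totals. The variable and function names are purely illustrative and do not refer to any tool used at the institute; only the numbers come from the text.

```python
# Overall figures quoted in the text.
TOTAL_SERVERS = 54
TOTAL_CORES = 1818
TOTAL_MEMORY_GB = 35072

# Server groups named explicitly: (number of servers, CPU cores per server).
# Names here are hypothetical and chosen for illustration only.
NAMED_GROUPS = [
    (24, 24),   # cluster of 24 servers with 24 cores each
    (4, 64),    # 4 servers with 64 cores each
    (2, 128),   # two systems with 128 cores each
]


def tally(groups):
    """Return (server count, core count) for a list of (servers, cores_each)."""
    servers = sum(count for count, _ in groups)
    cores = sum(count * cores_each for count, cores_each in groups)
    return servers, cores


named_servers, named_cores = tally(NAMED_GROUPS)

# Whatever is not covered by the named groups.
other_servers = TOTAL_SERVERS - named_servers
other_cores = TOTAL_CORES - named_cores

print(f"Named groups: {named_servers} servers, {named_cores} cores")
print(f"Everything else: {other_servers} servers, {other_cores} cores")
print(f"Average per server: {TOTAL_CORES / TOTAL_SERVERS:.1f} cores, "
      f"{TOTAL_MEMORY_GB / TOTAL_SERVERS:.0f} GB of RAM")
```

The three named groups account for 30 servers and 1088 cores, so the remaining 24 servers contribute the other 730 cores, which fits the description of a very heterogeneous landscape.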

25 Years MPI for Mathematics in the Sciences

All other episodes of our column can be found here.
