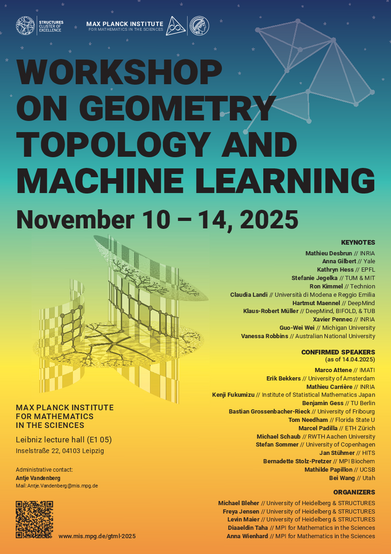

Workshop on Geometry, Topology, and Machine Learning (GTML 2025)

Registration is now closed.

The Workshop on Geometry, Topology, and Machine Learning (GTML) brings together geometry and topology, two rapidly evolving fields central to modern machine learning. Geometry and topology provide essential methods for describing the structure of data, as well as frameworks for analyzing, unifying, and generalizing machine learning techniques to new settings.

The workshop will feature 10 keynote talks and 20 presentations by leading experts. By merging the Workshop on Geometry and Machine Learning (GaML) and the Workshop on Topological Methods in Data Analysis (TMDA), GTML creates a platform for fostering collaboration and exploring the interplay between geometry, topology, and machine learning.

GTML 2025 is organized jointly by the Max Planck Institute for Mathematics in the Sciences and the STRUCTURES Cluster of Excellence.

Focus:

Topics of interest include, but are not limited to, the following:

- Mathematical foundations of machine learning

- Geometric machine learning (e.g., geometric deep learning, graph neural networks, geometry processing)

- Topological machine learning (e.g., topological deep learning, topological data analysis (TDA), shape analysis)

- Applications of geometry and topology in machine learning (e.g., in life sciences and complex systems)

Sessions:

- Foundations of Machine Learning I and II

- Geometric Deep Learning

- Geometry Processing/Geometric Data Analysis

- Geometric Statistics

- Shape Analysis

- Topological Deep Learning

- Topological Data Analysis I, II, and III

Confirmed Speakers:

- Keynotes:

  - Mathieu Desbrun (INRIA)

  - Anna Gilbert (Yale)

  - Kathryn Hess (EPFL)

  - Stefanie Jegelka (TUM & MIT)

  - Ron Kimmel (Technion)

  - Claudia Landi (University of Modena and Reggio Emilia)

  - Hartmut Maennel (DeepMind)

  - Klaus-Robert Müller (DeepMind, BIFOLD, & TU Berlin)

  - Xavier Pennec (INRIA)

  - Vanessa Robins (Australian National University)

  - Guo-Wei Wei (MSU)

- Talks:

  - Oleg Arenz (TU Darmstadt)

  - Marco Attene (IMATI)

  - Erik Bekkers (University of Amsterdam)

  - Mathieu Carrière (INRIA)

  - Kenji Fukumizu (Institute of Statistical Mathematics, Japan)

  - Benjamin Gess (TU Berlin)

  - Bastian Grossenbacher-Rieck (University of Fribourg)

  - Lukas Hahn (Deepshore)

  - Mustafa Hajij (USFCA)

  - Søren Hauberg (TU Denmark)

  - Guido Montúfar (MPI MiS, UCLA)

  - Tom Needham (Florida State University)

  - Marcel Padilla (ETH Zurich)

  - Mathilde Papillon (UC Santa Barbara)

  - Michael Schaub (RWTH Aachen University)

  - Stefan Sommer (University of Copenhagen)

  - Vincent Stimper (Isomorphic Labs)

  - Jan Stühmer (HITS)

  - Bernadette Stolz-Pretzer (MPI for Biochemistry)

  - Alice Barbora Tumpach (Wolfgang Pauli Institute)

  - Bei Wang (Utah)

Deadline:

- You can apply to present a lightning talk. See the registration page for more information. The application deadline is May 31, 2025.

WARNING: Please be wary if a company contacts you directly about payment for accommodation in Leipzig. This is fraud/phishing.

Previous Workshops:

- 2nd Workshop on Geometry and Machine Learning (GaML-23)

- 4th Workshop on Topological Methods in Data Analysis (TMDA-23)

- 1st Workshop on Geometry and Machine Learning (GaML-22)

- 3rd Workshop on Topological Methods in Data Analysis (TMDA-22)

- 2nd Workshop on Topological Methods in Data Analysis (TMDA-21)

- 1st Workshop on Topological Methods in Data Analysis (TMDA-20)