inBook

2020

Repository Open Access

Deep Ritz revisited

In: ICLR 2020 workshop on integration of deep neural models and differential equations : Millennium Hall, Addis Ababa, Ethiopia ; 26th April 2020. - [S. l.] : ICLR, 2020.

inJournal

2022

Repository Open Access

Natural reweighted wake-sleep

In: Neural networks, 155 (2022), pp. 574-591

MiS Preprint

2020

Repository Open Access

Natural Wake-Sleep Algorithm

inJournal

2023

Journal Open Access

On the locality of the natural gradient for learning in deep Bayesian networks

In: Information geometry, 6 (2023) 1, pp. 1-49

inBook

2020

Repository Open Access

On the space-time expressivity of ResNets

In: ICLR 2020 workshop on integration of deep neural models and differential equations : Millennium Hall, Addis Ababa, Ethiopia ; 26th April 2020. - [S. l.] : ICLR, 2020.

inBook

2019

Repository Open Access

A continuity result for optimal memoryless planning in POMDPs

In: RLDM 2019 : 4th multidisciplinary conference on reinforcement learning and decision making ; July 7-10, 2019 ; Montréal, Canada. - Montréal, Canada : University, 2019. - pp. 362-365

inBook

2019

Repository Open Access

Task-agnostic constraining in average reward POMDPs

In: Task-agnostic reinforcement learning : workshop at ICLR, 06 May 2019, New Orleans. - [S. l.] : ICLR, 2019.

Preprint

2018

Repository Open Access

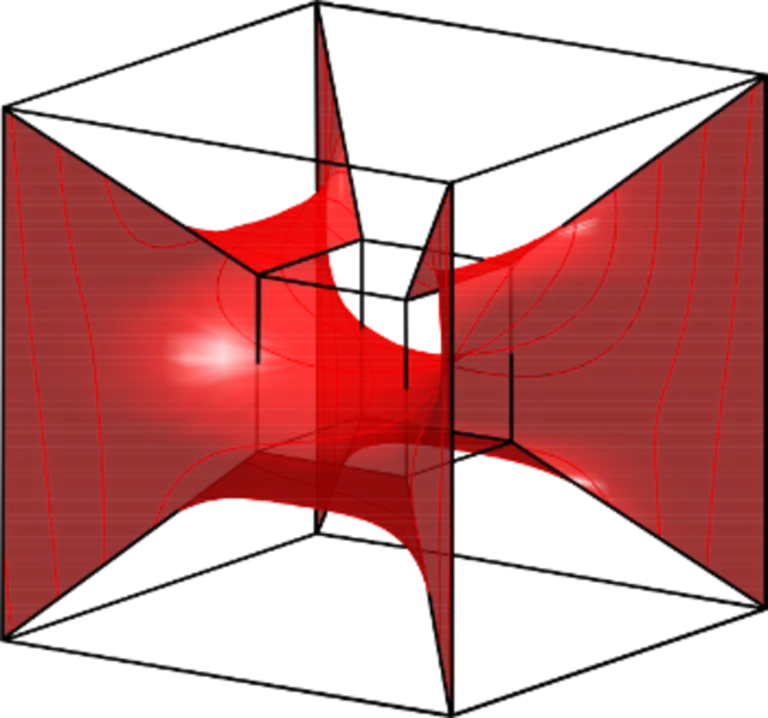

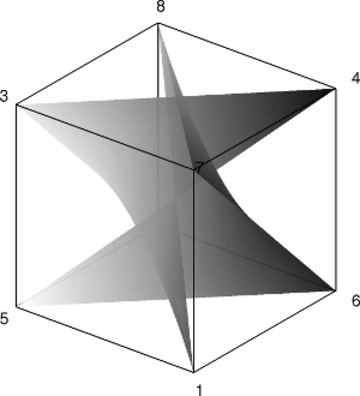

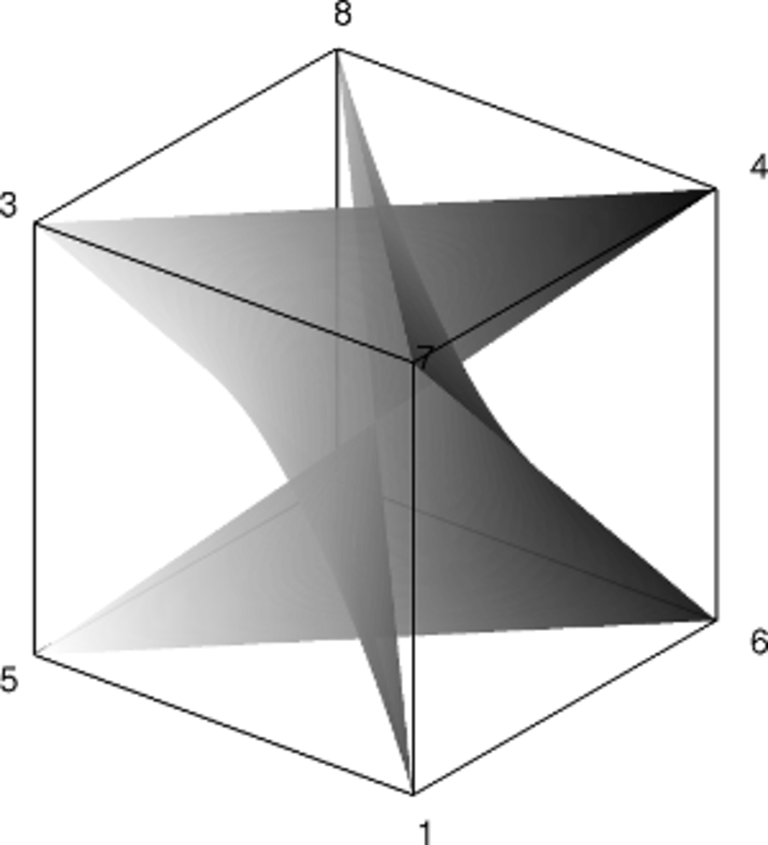

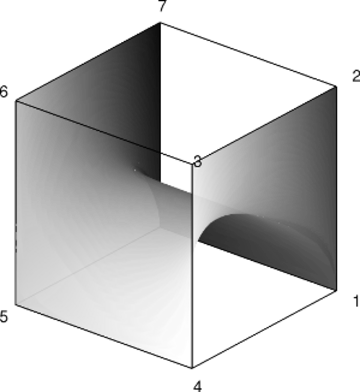

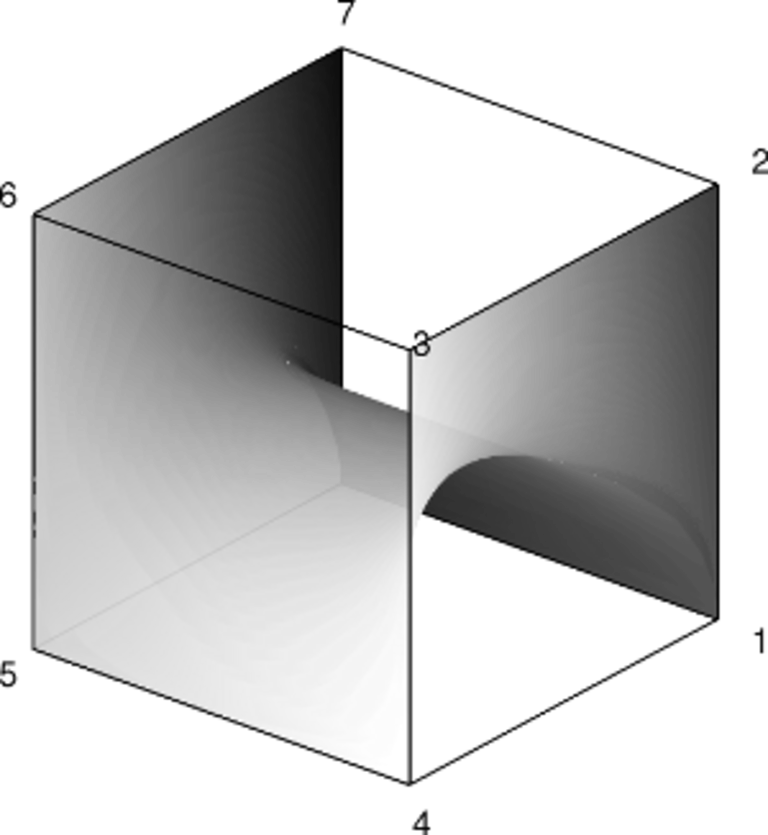

Illustration of maxout layer upper bound [Suppl. to: On the number of linear regions of deep neural networks]

inBook

2018

Repository Open Access

Uncertainty and stochasticity of optimal policies

In: Proceedings of the 11th workshop on uncertainty processing WUPES '18, June 6-9, 2018 / Václav Kratochvíl (ed.). - Praha : MatfyzPress, 2018. - pp. 133-140

inJournal

2017

Journal Open Access

Dimension of marginals of Kronecker product models

In: SIAM journal on applied algebra and geometry, 1 (2017) 1, pp. 126-151

inBook

2017

Repository Open Access

Geometry of policy improvement

In: Geometric science of information : Third International Conference, GSI 2017, Paris, France, November 7-9, 2017, proceedings / Frank Nielsen... (eds.). - Cham : Springer, 2017. - pp. 282-290

(Lecture notes in computer science ; 10589)

inBook

2015

Repository Open Access

Hierarchical models as marginals of hierarchical models

In: Proceedings of the 10th workshop on uncertainty processing WUPES '15, Moninec, Czech Republic, September 16-19, 2015 / Václav Kratochvíl (ed.). - Praha : Oeconomica, 2015. - pp. 131-145

inBook

2017

Repository Open Access

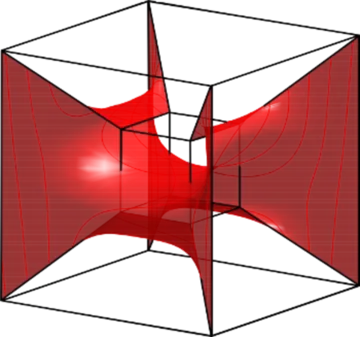

Notes on the number of linear regions of deep neural networks

In: 2017 international conference on sampling theory and applications (SampTA) / Gholamreza Anbarjafari... (eds.). - Piscataway, NJ : IEEE, 2017. - pp. 156-159

inJournal

2017

Repository Open Access

Restricted Boltzmann machines [In: Algebraic statistics ; 16 April - 22 April 2017 ; report no. 20/2017]

In: Oberwolfach reports, 14 (2017) 2, pp. 1241-1242

inBook

2015

Repository Open Access

Mode poset probability polytopes

In: Proceedings of the 10th workshop on uncertainty processing WUPES '15, Moninec, Czech Republic, September 16-19, 2015 / Václav Kratochvíl (ed.). - Praha : Oeconomica, 2015. - pp. 147-154

inBook

2015

Repository Open Access

A comparison of neural network architectures

In: Deep learning Workshop, ICML '15, Vauban Hall at Lille Grand Palais, France, July 10 and 11, 2015. - 2015.

inJournal

2015

Journal Open Access

A theory of cheap control in embodied systems

In: PLoS computational biology, 11 (2015) 9, e1004427

inBook

2015

Repository Open Access

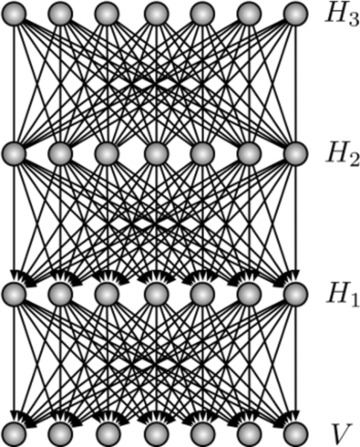

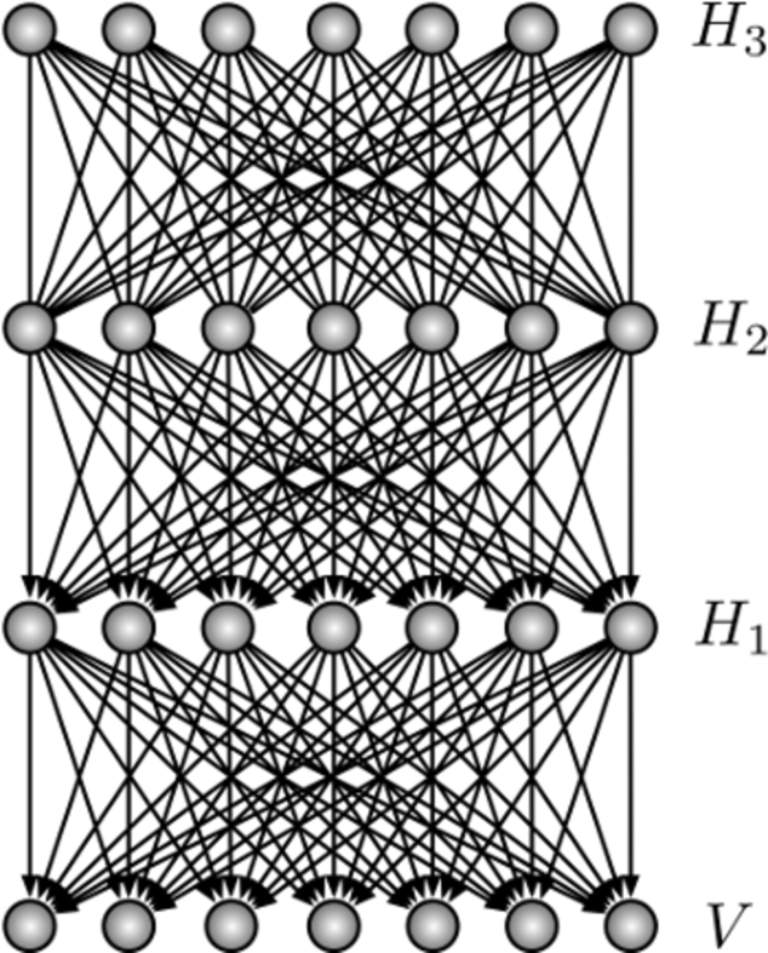

Deep narrow Boltzmann machines are universal approximators

In: Third international conference on learning representations - ICLR 2015 : May 7-9 2015, San Diego, CA, USA. - San Diego : ICLR, 2015.

inJournal

2015

Journal Open Access

Discrete restricted Boltzmann machines

In: Journal of machine learning research, 16 (2015), pp. 653-672

inJournal

2015

Journal Open Access

Geometric design principles for brains of embodied agents

In: Künstliche Intelligenz : KI, 29 (2015) 4, pp. 389-399

Preprint

2015

Repository Open Access

Geometry and determinism of optimal stationary control in partially observable Markov decision processes

inJournal

2015

Journal Open Access

Geometry and expressive power of conditional restricted Boltzmann machines

In: Journal of machine learning research, 16 (2015), pp. 2405-2436

inJournal

2017

Repository Open Access

Hierarchical models as marginals of hierarchical models

In: International journal of approximate reasoning, 88 (2017), pp. 531-546

Preprint

2015

Repository Open Access

Universal approximation of Markov kernels by shallow stochastic feedforward networks

inJournal

2015

Repository Open Access

When does a mixture of products contain a product of mixtures?

In: SIAM journal on discrete mathematics, 29 (2015) 1, pp. 321-347

inBook

2014

Geometry of hidden-visible products of statistical models

In: Algebraic Statistics 2014 : May 19-22. - Chicago, IL : Illinois Institute of Technology, 2014.

inJournal

2014

Journal Open Access

On the Fisher metric of conditional probability polytopes

In: Entropy, 16 (2014) 6, pp. 3207-3233

inBook

2014

Repository Open Access

On the number of inference regions of deep feed forward networks with piece-wise linear activations

In: Second international conference on learning representations - ICLR 2014 : 14-16 April 2014, Banff, Canada. - Banff : ICLR, 2014.

inBook

2014

Repository Open Access

On the number of linear regions of deep neural networks

In: NIPS 2014 : Proceedings of the 27th international conference on neural information processing systems - volume 2 ; Montreal, Quebec, Canada, December 8th-13th. - Cambridge, MA : MIT Press, 2014. - pp. 2924-2932

inJournal

2014

Repository Open Access

Robustness, canalyzing functions and systems design

In: Theory in biosciences, 133 (2014) 2, pp. 63-78

inJournal

2014

Journal Open Access

Scaling of model approximation errors and expected entropy distances

In: Kybernetika, 50 (2014) 2, pp. 234-245

inJournal

2014

Repository Open Access

Universal approximation depth and errors of narrow belief networks with discrete units

In: Neural computation, 26 (2014) 7, pp. 1386-1407

inBook

2013

Repository Open Access

Maximal information divergence from statistical models defined by neural networks

In: Geometric science of information : first international conference, GSI 2013, Paris, France, August 28-30, 2013. Proceedings / Frank Nielsen... (eds.). - Berlin [et al.] : Springer, 2013. - pp. 759-766

(Lecture notes in computer science ; 8085)

inJournal

2013

Journal Open Access

Mixture decompositions of exponential families using a decomposition of their sample spaces

In: Kybernetika, 49 (2013) 1, pp. 23-39

inBook

2013

Repository Open Access

Selection criteria for neuromanifolds of stochastic dynamics

In: Advances in cognitive neurodynamics III : proceedings of the 3rd International Conference on Cognitive Neurodynamics 2011 ; [June 9-13, 2011, Hilton Niseko Village, Hokkaido, Japan] / Yoko Yamaguchi (ed.). - Dordrecht : Springer, 2013. - pp. 147-154

(Advances in cognitive neurodynamics)

Academic

2012

On the expressive power of discrete mixture models, restricted Boltzmann machines, and deep belief networks - a unified mathematical treatment

Dissertation, Universität Leipzig, 2012

inBook

2012

Repository Open Access

Scaling of model approximation errors and expected entropy distances

In: Proceedings of the 9th workshop on uncertainty processing WUPES '12 : Marianske Lazne, Czech Republic ; 12-15th September 2012. - Praha : Academy of Sciences of the Czech Republic / Institute of Information Theory and Automation, 2012. - pp. 137-148

inBook

2011

Repository Open Access

Expressive power and approximation errors of restricted Boltzmann machines

In: Advances in neural information processing systems 24 : NIPS 2011 ; 25th annual conference on neural information processing systems 2011, Granada, Spain December 12th - 15th / John Shawe-Taylor (ed.). - La Jolla, CA : Neural Information Processing Systems, 2011. - pp. 415-423

inJournal

2011

Repository Open Access

Refinements of universal approximation results for deep belief networks and restricted Boltzmann machines

In: Neural computation, 23 (2011) 5, pp. 1306-1319

inBook

2010

Repository Open Access

Mixture models and representational power of RBM's, DBN's, and DBM's

In: NIPS 2010 : Deep learning and unsupervised feature learning workshop ; December 19, 2010, Hilton, Vancouver, Canada. - [S. l.] : NIPS, 2010. - pp. 1-9

Academic

2010

Repository Open Access

On boundaries of statistical models

Dissertation, Universität Leipzig, 2010

inJournal

2006

Journal Open Access

Maximizing multi-information

In: Kybernetika, 42 (2006) 5, pp. 517-538

Academic

2001